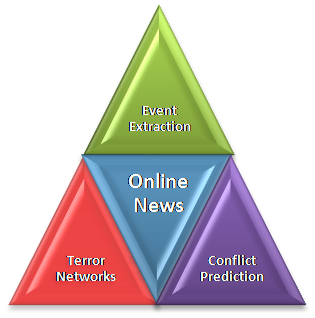

Project Description

With the explosion of online news over the past few years and recent advances in extracting information from text, our research is motivated by one question: is it possible to forecast the outbreak of serious conflict by monitoring news media from regions all over the world over extended periods of time, extracting information about events from them, and computationally analyzing the time series of extracted events?

Our research goals are:

- To design information extraction techniques and build events data sets for use by the entire scientific community.
- To use these events data to develop the algorithmic base for predicting the onset of serious conflict.
- To construct explanatory models that build on existing findings from the scientific study of international relations.

Timely warning of the outbreak of serious conflict can be a key element in conflict resolution: early warning can give state and non-state actors time to intervene and prevent the outbreak. We have developed new methods for event data extraction and analysis.

The raw material: online news

A key issue is the selection of online media outlets to serve as the source for coding events data. We have focused on wire media sources chosen for their electronic availability, their reputation, and their coverage of international news: BBC, Agence France Presse (AFP), Reuters, and the Associated Press. We have designed a suite of programs to extract relevant news stories directly from the websites of these news sources, as well as through aggregators such as Google News and Lexis-Nexis. We have obtained over a million articles pertaining to the Middle East, from 1998 to the present, from all four sources. The articles are stored in a custom relational database that we call the Ares news database. This representation facilitates complex queries over the stories and allows efficient access to them for further analysis. The Ares database also supports linking events data to the news stories from which they were generated, which makes it a useful tool for extracting and coding a diverse set of events data collections. We are currently porting it to a web-based interface built with Django, to help people build customized news collections for their own analyses. We expect our system to significantly aid the creation of new events data sets for analysis by both the computer science and political science communities.
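
The story-to-event linkage described above can be pictured as two related tables. The following is a minimal sketch using Python's built-in `sqlite3` module; the table and column names (`stories`, `events`, `weis_code`, and so on), the placeholder actor strings, and the sample rows are hypothetical and do not reproduce the actual Ares schema.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create hypothetical tables for news stories and the events coded from them."""
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS stories (
            story_id   INTEGER PRIMARY KEY,
            source     TEXT NOT NULL,      -- e.g. 'Reuters', 'AFP'
            pub_date   TEXT NOT NULL,      -- ISO 8601 date string
            headline   TEXT NOT NULL,
            body       TEXT NOT NULL
        );
        CREATE TABLE IF NOT EXISTS events (
            event_id   INTEGER PRIMARY KEY,
            story_id   INTEGER NOT NULL REFERENCES stories(story_id),
            event_date TEXT NOT NULL,      -- when
            actor      TEXT NOT NULL,      -- who
            weis_code  TEXT NOT NULL,      -- did what
            target     TEXT NOT NULL,      -- to whom
            score      REAL NOT NULL CHECK (score BETWEEN -10 AND 10)
        );
        CREATE INDEX IF NOT EXISTS idx_stories_source_date
            ON stories(source, pub_date);
        """
    )


def events_with_sources(conn, source, start, end):
    """Return each event together with the headline of the story it was coded from."""
    return conn.execute(
        """
        SELECT e.event_date, e.actor, e.weis_code, e.target, s.headline
        FROM events AS e JOIN stories AS s ON e.story_id = s.story_id
        WHERE s.source = ? AND s.pub_date BETWEEN ? AND ?
        ORDER BY e.event_date
        """,
        (source, start, end),
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    conn.execute("INSERT INTO stories VALUES (1, 'Reuters', '1998-03-02', 'Example headline', 'Example body')")
    conn.execute("INSERT INTO events VALUES (1, 1, '1998-03-02', 'ACTOR_A', '22', 'ACTOR_B', -10)")
    print(events_with_sources(conn, "Reuters", "1998-01-01", "1998-12-31"))
```

Keeping events in their own table, with a foreign key back to `stories`, means every coded event can be traced to the text it came from, which is the property the Ares database is described as providing.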

Filtering irrelevant stories

To our surprise, only about 10% of the stories retrieved from the news sources contain codeable events. Most stories either do not pertain to conflict or are not factual reports of conflictual events (opinion pieces, for example). Filtering out these stories effectively is therefore a key step in the analysis. We have trained probabilistic Naive Bayes classifiers, SVM classifiers, and Okapi-based classifiers to distinguish codeable stories from uncodeable ones. Our classifiers reach an accuracy of about 94%, with a recall of 94% and a precision of 87%. The biggest challenge in filtering is that models learned for a given period quickly become outdated as events move on and new words appear. Adapting our models to such non-stationary environments remains an open challenge.
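
As an illustration of the Naive Bayes option, here is a minimal multinomial Naive Bayes filter with add-one smoothing, plus a helper that computes precision, recall, and accuracy for the "codeable" class. It is a sketch, not the project's classifier: the whitespace tokenizer, the label names `codeable`/`uncodeable`, and the toy training sentences are assumptions. Because the model is only a set of counts, `update()` can fold newly labeled stories in without retraining, which is one simple (and only partial) response to the drift problem described above.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesFilter:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing.

    The model is just a set of counts, so update() can fold in newly
    labeled stories at any time without retraining from scratch.
    """

    def __init__(self):
        self.doc_counts = Counter()              # label -> number of stories
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.total_words = Counter()             # label -> number of tokens
        self.vocab = set()

    @staticmethod
    def tokenize(text):
        # Assumption: lowercase whitespace tokenization, no stemming or stop words.
        return text.lower().split()

    def update(self, text, label):
        """Add one labeled story to the counts."""
        tokens = self.tokenize(text)
        self.doc_counts[label] += 1
        self.word_counts[label].update(tokens)
        self.total_words[label] += len(tokens)
        self.vocab.update(tokens)

    def predict(self, text):
        """Return the label with the highest log posterior probability."""
        n_stories = sum(self.doc_counts.values())
        vocab_size = len(self.vocab)
        best_label, best_score = None, -math.inf
        for label, n in self.doc_counts.items():
            score = math.log(n / n_stories)  # log prior
            denom = self.total_words[label] + vocab_size
            for tok in self.tokenize(text):
                # Add-one smoothing keeps unseen words from zeroing the product.
                score += math.log((self.word_counts[label][tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


def precision_recall_accuracy(y_true, y_pred, positive="codeable"):
    """Precision and recall for the positive label, plus overall accuracy."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    return precision, recall, accuracy


if __name__ == "__main__":
    nb = NaiveBayesFilter()
    nb.update("troops shelled the border village", "codeable")
    nb.update("militants attacked a police station", "codeable")
    nb.update("the film festival opened with a new comedy", "uncodeable")
    nb.update("columnist argues the economy needs reform", "uncodeable")
    print(nb.predict("troops attacked the village"))  # codeable
    print(nb.predict("a new comedy film"))            # uncodeable
```

Precision here is the fraction of stories flagged as codeable that really are codeable, and recall is the fraction of truly codeable stories that get flagged, which is why a filter can combine high recall with noticeably lower precision.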

Event extraction

We extract events from each codeable news story using a class of programs called event extractors. These programs analyze the text of the story (including the headline) and gather four pieces of information: who did what to whom, and when. The actors are states or organizations (e.g., Hamas or LET). The events are coded in a conceptual hierarchy called WEIS, developed by political scientists and used by them for manual coding of news stories. Each event type has an associated scaled score ranging from -10 (conflict) to +10 (cooperation).
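
The who/what/whom/when record described above maps naturally onto a small data type. This sketch assumes integer top-level WEIS categories 1 to 22 (the section later refers to the 22 WEIS action categories, with 22 being force) and takes the scaled score as an input; in practice the score would come from a lookup table keyed by event type, whose values are not reproduced here. The actor strings in the usage line are placeholders.

```python
from dataclasses import dataclass
from datetime import date

NUM_WEIS_CATEGORIES = 22  # top-level WEIS action categories, as stated in the text


@dataclass(frozen=True)
class Event:
    """One coded event: who (actor) did what (weis_code) to whom (target) and when."""
    actor: str
    weis_code: int  # top-level category, 1..22 (22 = force)
    target: str
    when: date
    score: float    # scaled score, -10 (conflict) to +10 (cooperation)

    def __post_init__(self):
        # Reject records outside the coding scheme's ranges.
        if not 1 <= self.weis_code <= NUM_WEIS_CATEGORIES:
            raise ValueError(f"unknown WEIS category: {self.weis_code}")
        if not -10.0 <= self.score <= 10.0:
            raise ValueError(f"score out of range: {self.score}")

    @property
    def is_conflictual(self) -> bool:
        """True when the scaled score falls on the conflict side of the scale."""
        return self.score < 0


if __name__ == "__main__":
    e = Event("ACTOR_A", 22, "Hamas", date(1998, 3, 2), -10.0)
    print(e.is_conflictual)  # True
```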

We examined whether the best-known event extractor, TABARI, developed by Phil Schrodt at the University of Kansas with NSF support, could be used for our project. TABARI is tuned for the Reuters data set from 1979 to 1999. It codes simple declarative sentences well, but it fails to detect events in sentences in the passive voice and in complex sentence structures with relative clauses. Such complex sentences occur often in our sources, which makes parsing the sentences a necessary step.
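
To make this failure mode concrete, here is a toy dictionary-based coder that, like a shallow pattern matcher, expects an actor to be immediately followed by a known verb and then a target. It is not TABARI's actual algorithm, and the `ACTORS` and `VERBS` dictionaries are hypothetical. The block also includes a toy regex-based `simplify()` that rewrites a simple passive sentence in the active voice and strips a "which"/"who" relative clause; it is only a stand-in for a parser-based simplifier, which works on parse trees rather than surface patterns.

```python
import re

# Hypothetical toy dictionaries; real coder dictionaries are far larger.
ACTORS = {"israel": "ISR", "hamas": "HAM", "iraq": "IRQ"}
VERBS = {"attacked": 22}  # WEIS category 22 = force

# Toy simplification rules (stand-ins for parse-tree transformations).
PASSIVE = re.compile(r"(?P<patient>.+?)\s+(?:was|were)\s+(?P<verb>\w+)\s+by\s+(?P<agent>.+?)\.?")
RELATIVE = re.compile(r",\s*(?:which|who)\b[^,]*,")


def tokenize(sentence):
    return re.findall(r"[a-z]+", sentence.lower())


def code_sentence(sentence):
    """Return (actor, weis_code, target) or None.

    Requires an actor immediately followed by a known verb, with a target
    somewhere after it, so passive voice and interposed clauses are missed.
    """
    tokens = tokenize(sentence)
    for i, tok in enumerate(tokens[:-1]):
        if tok in ACTORS and tokens[i + 1] in VERBS:
            for later in tokens[i + 2:]:
                if later in ACTORS:
                    return (ACTORS[tok], VERBS[tokens[i + 1]], ACTORS[later])
    return None


def simplify(sentence):
    """Drop a ', which/who ...,' clause and rewrite 'X was/were V by Y' as 'Y V X.'"""
    s = RELATIVE.sub("", sentence)
    m = PASSIVE.fullmatch(s)
    if m:
        s = f"{m['agent']} {m['verb']} {m['patient']}."
    return s


if __name__ == "__main__":
    for s in [
        "Israel attacked Hamas positions in Gaza.",
        "Hamas positions were attacked by Israel.",
        "Israel, which had warned of reprisals, attacked Hamas.",
    ]:
        # Coding of the raw sentence, then of the simplified sentence.
        print(code_sentence(s), code_sentence(simplify(s)))
```

Running it prints `('ISR', 22, 'HAM') ('ISR', 22, 'HAM')` for the active sentence and `None ('ISR', 22, 'HAM')` for each of the other two. The passive rule only works when the participle matches the past-tense form in the verb dictionary, which is exactly the kind of brittleness that motivates parsing.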

We have devised a new sentence simplifier that uses the open-source Stanford NLP parser to generate simple declarative sentences from more complex ones. Simplification significantly improves TABARI's coding: of 200 Reuters sentences from September 1997, only 25 were codeable without simplification, whereas with simplification TABARI coded 78 of them.

To overcome TABARI's limitations, we also implemented our own event coder based on conditional random fields (CRFs). To identify actors and targets in wire stories with high accuracy, we use standard named-entity recognition methods, training a probabilistic named-entity model on a high-quality hand-labeled data set. Combining sentence simplification with this actor/target identification, our system performs as well as human coders on this task. We have also designed a CRF technique for classifying the main verbs of event-containing sentences into the 22 action categories of the WEIS code. On Reuters sentences, it reached an accuracy of 72% (recall of 70% and precision of 91%) for event code 22 (force), whereas TABARI reached 22% accuracy on the same benchmark (recall of 8% and precision of 50%).
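
The decoding step of a linear-chain CRF can be sketched as follows: given per-token feature weights and label-transition weights, the Viterbi algorithm finds the label sequence with the highest total score. This covers inference only. The label set (`O`, `ACTOR`, `TARGET`), the features, and every weight are hypothetical and hand-set; a real CRF learns its weights from labeled data (for example by maximizing conditional log-likelihood), and the project's actual features are not described here.

```python
LABELS = ["O", "ACTOR", "TARGET"]

# Hypothetical hand-set weights; a trained CRF would learn these.
EMISSION = {  # (feature, label) -> weight
    ("capitalized", "O"): -0.5,
    ("capitalized", "ACTOR"): 1.5,
    ("capitalized", "TARGET"): 1.5,
    ("lowercase", "O"): 1.0,
    ("lowercase", "ACTOR"): -1.0,
    ("lowercase", "TARGET"): -1.0,
    ("sentence_start", "ACTOR"): 1.0,
    ("sentence_start", "TARGET"): -1.0,
}
TRANSITION = {  # (previous label, label) -> weight; missing pairs score 0
    ("ACTOR", "ACTOR"): 0.5,    # allow multi-token actor names
    ("TARGET", "TARGET"): 0.5,  # allow multi-token target names
    ("O", "ACTOR"): -1.0,       # discourage an actor after ordinary words
    ("ACTOR", "TARGET"): -2.0,  # a target should not directly follow an actor
    ("O", "TARGET"): 0.5,       # targets tend to follow ordinary words (the verb)
}


def features(tokens, t):
    """Binary features that fire for token t."""
    feats = ["capitalized" if tokens[t][:1].isupper() else "lowercase"]
    if t == 0:
        feats.append("sentence_start")
    return feats


def node_score(tokens, t, label):
    return sum(EMISSION.get((f, label), 0.0) for f in features(tokens, t))


def viterbi(tokens):
    """Return the highest-scoring label sequence under the linear-chain model."""
    if not tokens:
        return []
    best = [{y: node_score(tokens, 0, y) for y in LABELS}]  # best[t][y]: top score ending in y
    back = [{}]                                              # back[t][y]: best previous label
    for t in range(1, len(tokens)):
        best.append({})
        back.append({})
        for y in LABELS:
            prev, score = max(
                ((p, best[t - 1][p] + TRANSITION.get((p, y), 0.0)) for p in LABELS),
                key=lambda pair: pair[1],
            )
            best[t][y] = score + node_score(tokens, t, y)
            back[t][y] = prev
    # Follow back-pointers from the best final label.
    path = [max(LABELS, key=lambda y: best[-1][y])]
    for t in range(len(tokens) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]


if __name__ == "__main__":
    print(viterbi(["Israel", "attacked", "Hamas"]))  # ['ACTOR', 'O', 'TARGET']
```

Because the transition weights score label pairs jointly, the decoder can prefer a globally consistent actor, verb, target pattern over labeling each token in isolation, which is the usual reason to choose a CRF over an independent per-token classifier.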

Analyzing events data

We analyzed a previously gathered event data collection coded from Reuters stories covering eight countries in the Gulf region of the Middle East for the period 1979 to 1999. We aggregated the scaled event scores for these eight countries every two weeks, so that each point in the time series is the average event score over a two-week period. This series is highly non-stationary, so traditional prediction methods do not perform well. Instead, we use wavelets to perform a multiscale decomposition of the signal and to find discontinuities in the series. These discontinuities appear across all scales and are identified using Mallat's wavelet modulus maxima technique.

Surprisingly, these discontinuities correspond almost exactly to major conflicts in the region! They include the start and end of the Iran/Iraq war, the Gulf War, and the first and second Intifadas. Further, the wavelet coefficients preceding a singularity or discontinuity exhibit trends that allow us to predict conflicts with high probability about 8 weeks before actual hostilities break out.

We have repeated our analysis on Cold War events data spanning fifteen years and developed a computational chronology of key events in that period. We have also extracted and analyzed the events that led up to the 2003 Iraq war. Our methods allow us to provide a computational account of the history of these periods based solely on events gathered from wire sources.
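
The aggregation and discontinuity-detection steps can be sketched in a few functions. This is a simplified stand-in, not Mallat's full method: it uses an undecimated Haar-style transform (the mean of the next s samples minus the mean of the previous s samples, for s = 1, 2, 4, 8), flags local maxima of the coefficient magnitude above a threshold, and keeps only the finest-scale maxima that persist at every coarser scale. Mallat's technique tracks maxima lines of a wavelet transform across scales and also uses how the maxima change with scale to characterize each singularity. The empty-bin rule, the threshold, and the tolerance are hypothetical choices, and the forecasting step is not reproduced.

```python
from datetime import date


def biweekly_means(events, start, n_periods):
    """Average (date, score) events into consecutive 14-day bins starting at `start`.

    Assumption: bins with no events are set to 0.0 (treated as neutral).
    """
    sums = [0.0] * n_periods
    counts = [0] * n_periods
    for day, score in events:
        k = (day - start).days // 14
        if 0 <= k < n_periods:
            sums[k] += score
            counts[k] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


def haar_detail(x, s):
    """Undecimated Haar-style detail at width s: mean of x[t:t+s] minus mean of x[t-s:t].

    Edges are handled by repeating the first/last sample.
    """
    n = len(x)
    at = lambda i: x[min(max(i, 0), n - 1)]
    return [
        (sum(at(i) for i in range(t, t + s)) - sum(at(i) for i in range(t - s, t))) / s
        for t in range(n)
    ]


def modulus_maxima(d, threshold):
    """Indices where |d| is a local maximum and exceeds `threshold`."""
    m = [abs(v) for v in d]
    return [
        t for t in range(len(m))
        if m[t] > threshold
        and (t == 0 or m[t] >= m[t - 1])
        and (t == len(m) - 1 or m[t] > m[t + 1])
    ]


def singularities(x, scales=(1, 2, 4, 8), threshold=0.5, tol=2):
    """Finest-scale maxima that persist (within +/- tol samples) at every coarser scale."""
    maxima = [modulus_maxima(haar_detail(x, s), threshold) for s in scales]
    return [
        t for t in maxima[0]
        if all(any(abs(t - u) <= tol for u in coarser) for coarser in maxima[1:])
    ]


if __name__ == "__main__":
    # Synthetic series: cooperative, then a conflict episode, then cooperative again.
    series = [1.0] * 15 + [-6.0] * 10 + [1.0] * 15
    print(singularities(series))  # [15, 25]
```

On this synthetic series the detected indices are the onset and end of the low-score episode, mirroring in miniature how discontinuities in the real series line up with the start and end of conflicts.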