Different camera position

Different camera position

Segmen- tation mask

Segmen- tation mask

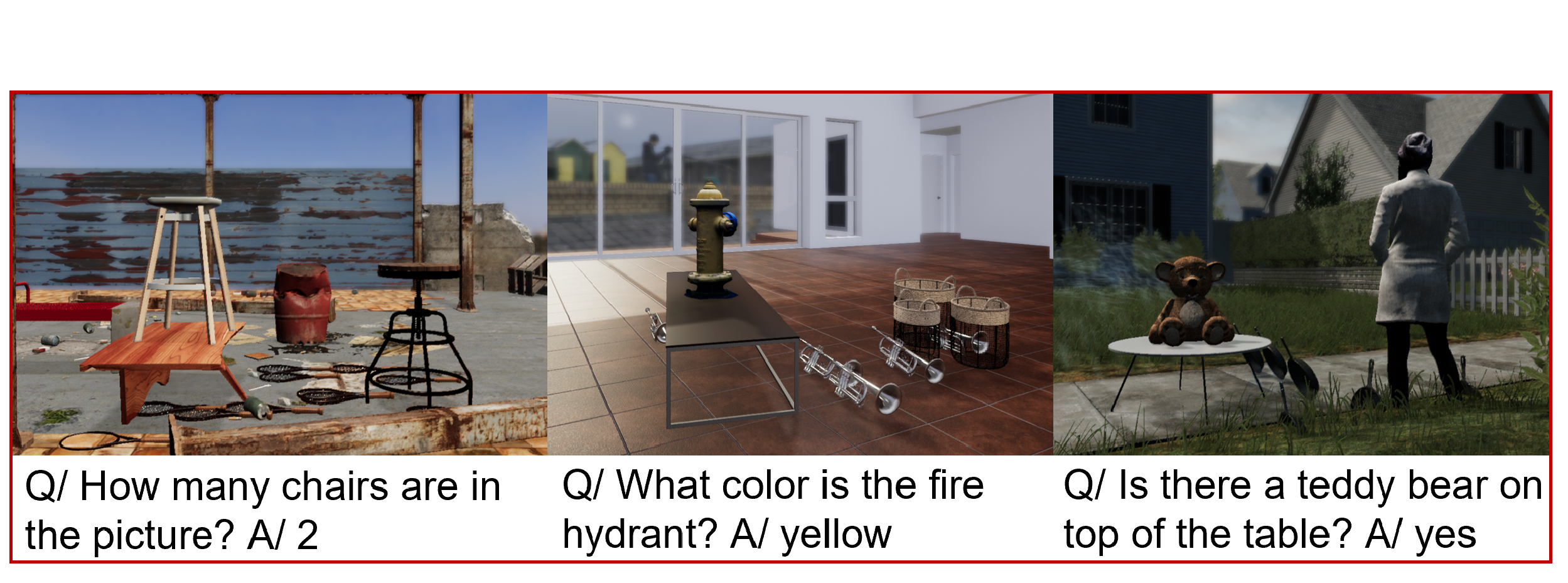

*Image samples from a subset of our TDW-VQA dataset.

**Search criteria for objects is an inclusive or, and FIFO.

It might take a few seconds to load the database for your first search.